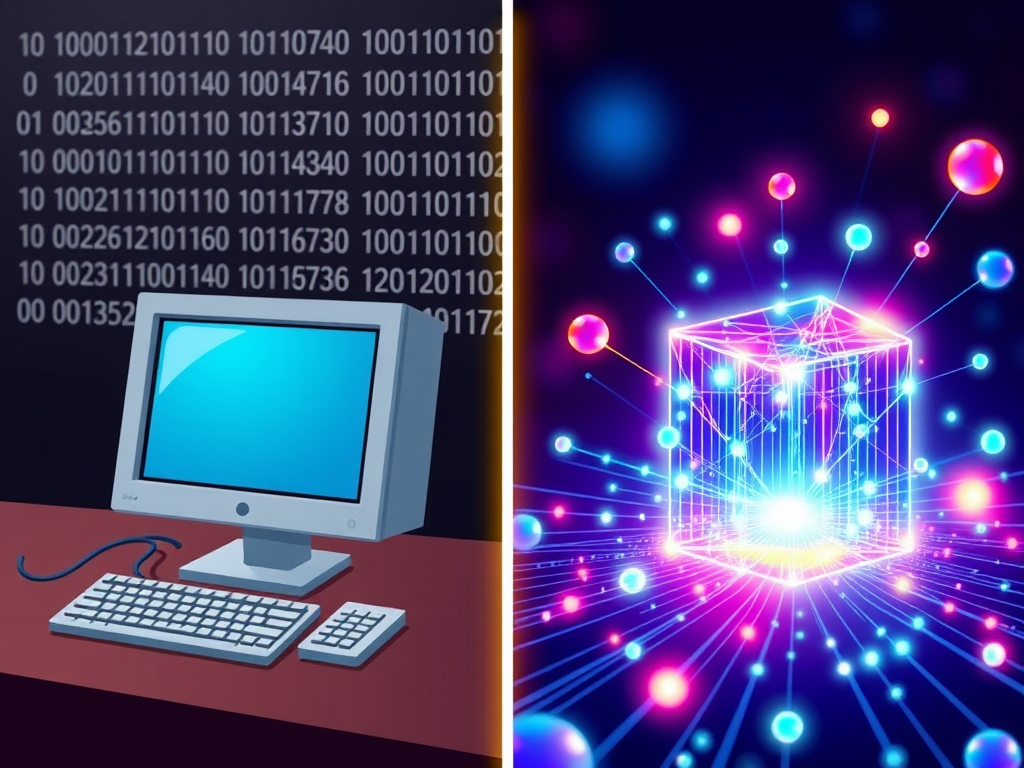

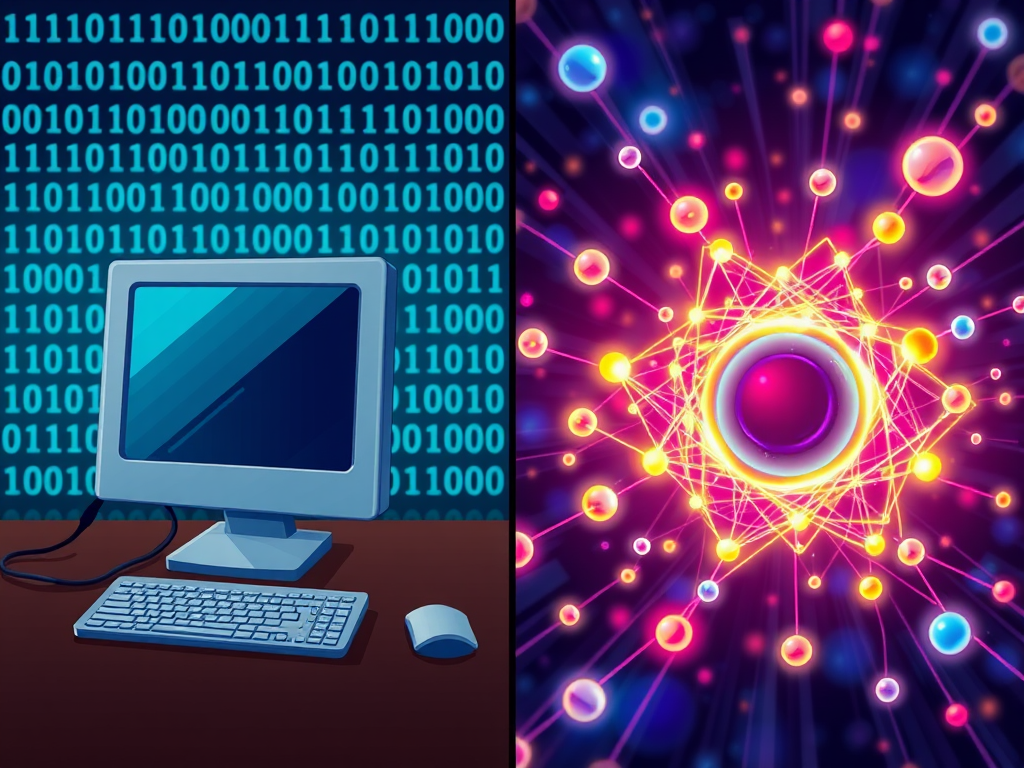

As technology changes, the way we process information has also evolved. Two major types of computing are classical and quantum computing. Understanding the differences between these two is important for grasping the future of technology.

Classical Computing

Classical computing is the traditional way of processing information. It is the type of computing we have used for decades, and it has several key features:

1. Basic Units of Information

Classical computers use bits as the smallest unit of information. A bit can be either 0 or 1. Eight bits form a byte, and bytes are the units in which data is usually stored and processed. This system allows classical computers to perform many tasks, from simple calculations to handling large amounts of data.
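As a small illustration of how bits combine into a byte, here is a minimal Python sketch. The function names `bits_to_byte` and `byte_to_bits` are made up for this example, and it assumes the most significant bit comes first.

```python
def bits_to_byte(bits):
    """Combine eight bits (most significant first) into an integer from 0 to 255."""
    if len(bits) != 8 or any(b not in (0, 1) for b in bits):
        raise ValueError("expected exactly eight values, each 0 or 1")
    value = 0
    for bit in bits:
        # Shift the bits collected so far one place left, then add the new bit.
        value = (value << 1) | bit
    return value


def byte_to_bits(value):
    """Split an integer from 0 to 255 back into its eight bits."""
    if not 0 <= value <= 255:
        raise ValueError("a byte holds values from 0 to 255")
    return [(value >> shift) & 1 for shift in range(7, -1, -1)]


letter = bits_to_byte([0, 1, 0, 0, 0, 0, 0, 1])
print(letter, chr(letter))  # 65 A
```

The same eight switches can stand for a number, a letter, or part of an image; what they mean depends on how the software interprets them.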

2. Processing Power

The performance of classical computers depends on factors such as clock speed and the number of transistors (tiny switches) in their processors. Modern classical computers can handle billions of operations every second. However, they reach their limits on problems whose cost grows very quickly with size, such as some large simulations.

3. Deterministic Nature

Classical computers operate in a predictable way. This means that if you input the same data, you will always get the same result. This reliability is useful for everyday tasks like word processing, browsing the web, and managing databases.
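One way to see this predictability is with a short Python sketch. It uses the standard library's `hashlib` module; the helper name `fingerprint` is just an assumption for this example.

```python
import hashlib


def fingerprint(text):
    """Return the SHA-256 digest of text as a hexadecimal string."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


first = fingerprint("classical computing")
second = fingerprint("classical computing")

# The same input always produces exactly the same output.
print(first == second)  # True
```

However many times this runs, and on whichever machine, the comparison comes out the same.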

4. Applications

Classical computers are great for tasks like calculations, data processing, and running software applications. They are widely used in homes, schools, and businesses. Industries such as finance, healthcare, and education rely on classical computers for data analysis and decision-making.

5. Limitations

Despite their effectiveness, classical computers struggle with certain problems. For example, tasks like factoring very large numbers or simulating complex molecules can take an impractically long time. This challenge has led researchers to explore new computing methods, including quantum computing.
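To get a feel for why factoring becomes slow, here is a rough Python sketch of trial division, the simplest factoring method. It is an illustration only: real classical factoring tools use far better algorithms, and the function name `trial_division` is chosen for this example.

```python
def trial_division(n):
    """Return (smallest factor of n greater than 1, number of divisions tried).

    If n is prime, the factor returned is n itself.
    """
    if n < 2:
        raise ValueError("n must be at least 2")
    steps = 0
    divisor = 2
    while divisor * divisor <= n:
        steps += 1
        if n % divisor == 0:
            return divisor, steps
        divisor += 1
    return n, steps


# Products of two primes of similar size are the hard case:
# the work grows with the size of the smaller prime.
for n in (101 * 103, 1009 * 1013, 10007 * 10009):
    factor, steps = trial_division(n)
    print(n, factor, steps)
```

Each time the primes gain one more digit, the step count grows about tenfold. Numbers used in real encryption have hundreds of digits, which puts this approach far out of reach.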

Quantum Computing

Quantum computing uses the principles of quantum mechanics to process information in a completely different way. This new type of computing has the potential to solve problems that classical computers find very difficult.

1. Quantum Bits (Qubits)

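In quantum computing, the basic unit of information is called a qubit. Unlike a classical bit, a qubit can exist in a superposition: a weighted combination of 0 and 1 described by two numbers called amplitudes. Measuring the qubit yields either 0 or 1, with probabilities set by those amplitudes. A register of n qubits is described by 2^n amplitudes, and this is the resource that quantum algorithms exploit. It does not mean that a quantum computer can simply read out every answer at once.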
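Superposition can be sketched on an ordinary computer by tracking a qubit's two amplitudes directly. The Python below is a toy simulation, not code for real quantum hardware. The names `hadamard` and `probabilities` are assumptions for this example; the Hadamard gate itself is a standard operation that turns a definite 0 into an equal superposition.

```python
import math


def hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (amp_zero, amp_one)."""
    amp_zero, amp_one = state
    scale = 1 / math.sqrt(2)
    return (scale * (amp_zero + amp_one), scale * (amp_zero - amp_one))


def probabilities(state):
    """Return the chances of measuring 0 and 1 for the given state."""
    amp_zero, amp_one = state
    return (abs(amp_zero) ** 2, abs(amp_one) ** 2)


zero = (1.0, 0.0)        # a qubit that is definitely 0
plus = hadamard(zero)    # an equal superposition of 0 and 1

print(probabilities(zero))
print(probabilities(plus))
```

After the gate, both outcomes are equally likely, up to floating-point rounding. Applying `hadamard` a second time returns the qubit to a definite 0, something a coin flip could never do.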

2. Entanglement

Another important feature of quantum computing is entanglement. When qubits are entangled, their states cannot be described independently: measuring one qubit gives a result that is correlated with the measurement of the other, even if the qubits are far apart. Quantum algorithms rely on these correlations to perform certain calculations more efficiently.
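The correlation between entangled qubits can be illustrated with a toy simulation. The sketch below assumes a standard two-qubit entangled state (a Bell state) and samples measurement results from it; `BELL_STATE` and `measure` are names chosen for this example.

```python
import math
import random

# Amplitudes for the outcomes 00, 01, 10, 11, in that order.
# Only 00 and 11 have non-zero amplitude.
BELL_STATE = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]


def measure(state, rng):
    """Sample one two-qubit measurement result, such as '00' or '11'."""
    weights = [abs(amplitude) ** 2 for amplitude in state]
    index = rng.choices(range(len(state)), weights=weights)[0]
    return format(index, "02b")


rng = random.Random(0)  # fixed seed so the run can be repeated
samples = [measure(BELL_STATE, rng) for _ in range(1000)]

print(sorted(set(samples)))
print(all(sample[0] == sample[1] for sample in samples))
```

Each qubit on its own looks like a fair coin, yet the two results always agree. That agreement is the correlation described above.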

3. Probabilistic Nature

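Quantum algorithms are probabilistic: measuring the qubits at the end of a computation gives one outcome drawn from a probability distribution, so the result can vary from run to run. A well-designed algorithm uses interference to make correct answers much more likely than incorrect ones, and a program is often run many times so that the most frequent outcome can be taken as the answer. This is different from trying every solution at once, but it can still give a large advantage for certain tasks.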
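The idea of running a quantum program many times can be sketched as follows. This toy Python example assumes a single qubit whose final state gives 0 with probability 0.8; the function name `run_shots` and the chosen amplitudes are illustrative only.

```python
import math
import random
from collections import Counter


def run_shots(state, shots, rng):
    """Measure a single-qubit state (amp_zero, amp_one) many times and count results."""
    p_zero = abs(state[0]) ** 2
    return Counter("0" if rng.random() < p_zero else "1" for _ in range(shots))


# Hypothetical final state of some algorithm: 0 is the intended answer.
state = (math.sqrt(0.8), math.sqrt(0.2))

rng = random.Random(0)  # fixed seed so the run can be repeated
counts = run_shots(state, 1000, rng)
most_common_outcome = counts.most_common(1)[0][0]

print(counts)
print(most_common_outcome)
```

Any single measurement might give the wrong answer, but over many shots the intended one clearly dominates.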

4. Applications

Quantum computers may excel at certain problems that are too hard for classical computers. Areas such as cryptography (security), drug discovery, and optimization are considered particularly likely to benefit. For example, Shor’s algorithm could factor large numbers far faster than any known classical method, given a sufficiently large, error-corrected quantum computer. Because widely used encryption schemes such as RSA rely on factoring being hard, this poses new challenges for current security systems.

5. Current State and Challenges

Although quantum computing is promising, it is still in the early stages of development. Researchers face several challenges, such as keeping qubits stable and correcting errors. Qubits are sensitive to noise from their environment and can easily lose their quantum properties (a process called decoherence), which makes it difficult to build practical quantum computers.
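The basic idea behind error correction, adding redundancy so that errors can be outvoted, can be shown with a classical analogy. The Python sketch below uses a three-bit repetition code. It is only an analogy: qubits cannot simply be copied, and real quantum codes must also handle kinds of errors that classical bits do not have. All function names are chosen for this example.

```python
import random


def encode(bit):
    """Store one bit as three copies."""
    return [bit, bit, bit]


def add_noise(codeword, flip_probability, rng):
    """Flip each bit independently with the given probability."""
    return [b ^ 1 if rng.random() < flip_probability else b for b in codeword]


def decode(codeword):
    """Recover the bit by majority vote."""
    return 1 if sum(codeword) >= 2 else 0


rng = random.Random(1)  # fixed seed so the run can be repeated
trials = 10_000
flip_probability = 0.1

# Send a 0 each time and count how often a 1 comes out.
raw_errors = sum(add_noise([0], flip_probability, rng)[0] for _ in range(trials))
coded_errors = sum(
    decode(add_noise(encode(0), flip_probability, rng)) for _ in range(trials)
)

print(raw_errors / trials, coded_errors / trials)
```

The protected bit fails only when at least two of its three copies flip, so its error rate comes out well below that of the unprotected bit.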

Key Differences

| Feature | Classical Computing | Quantum Computing |
| --- | --- | --- |
| Basic Unit | Bit (0 or 1) | Qubit (0, 1, or a superposition of both) |
| Processing Power | Predictable; set by clock speed and transistor count | Probabilistic; relies on superposition, entanglement, and interference |
| Speed | Fast for everyday tasks; impractically slow for some problems | Potentially much faster for certain tasks |
| Applications | General-purpose tasks | Specialized tasks such as cryptography, drug discovery, and optimization |
| Nature of Operations | Deterministic: same input, same output | Probabilistic: measured results can vary between runs |
| Error Handling | Simple error correction methods | Complex error correction needed |

Conclusion

Classical computing is the foundation of our current technology, but quantum computing has the potential to change how we solve complex problems. As research continues, quantum technology could transform many industries and open new possibilities.

Understanding the differences between classical and quantum computing helps clarify where each is likely to be useful. Quantum computing is not just about new machines; it’s about a new way of thinking about information itself.